The SEC delayed Canary Capital’s application for a Litecoin ETF today, opening a public comment period on whether the proposal complies with regulatory requirements. The price of LTC fell 5% after the announcement.

The request for public comments doesn’t appear to signal the Commission’s intentions; this could be a standard delaying tactic. Nonetheless, the market immediately took it as a bearish signal.

Will the SEC Reject Canary’s Litecoin ETF?

A few months ago, analysts proposed that the Litecoin ETF was more likely to win SEC approval than any other altcoin ETF. Its Polymarket odds briefly reached 85% in February, and today’s SEC deadline further spurred community hype.

However, the SEC instead decided to delay this application, including a request for public comments in its notice:

“The Commission seeks and encourages interested persons to provide comments on the proposed rule change. The Commission asks that commenters address the sufficiency of [whether] the proposal… is designed to prevent fraudulent and manipulative acts and practices or raises any new or novel concerns not previously contemplated by the Commission,” it read.

To be clear, it is not certain that this request constitutes a bearish development. The SEC is fielding a lot of altcoin ETF proposals right now, and it recently delayed several.

It even opened public comments for a Litecoin ETF proposal in February. In other words, this might be a standard delaying tactic. Unfortunately, the market hasn’t taken it well.

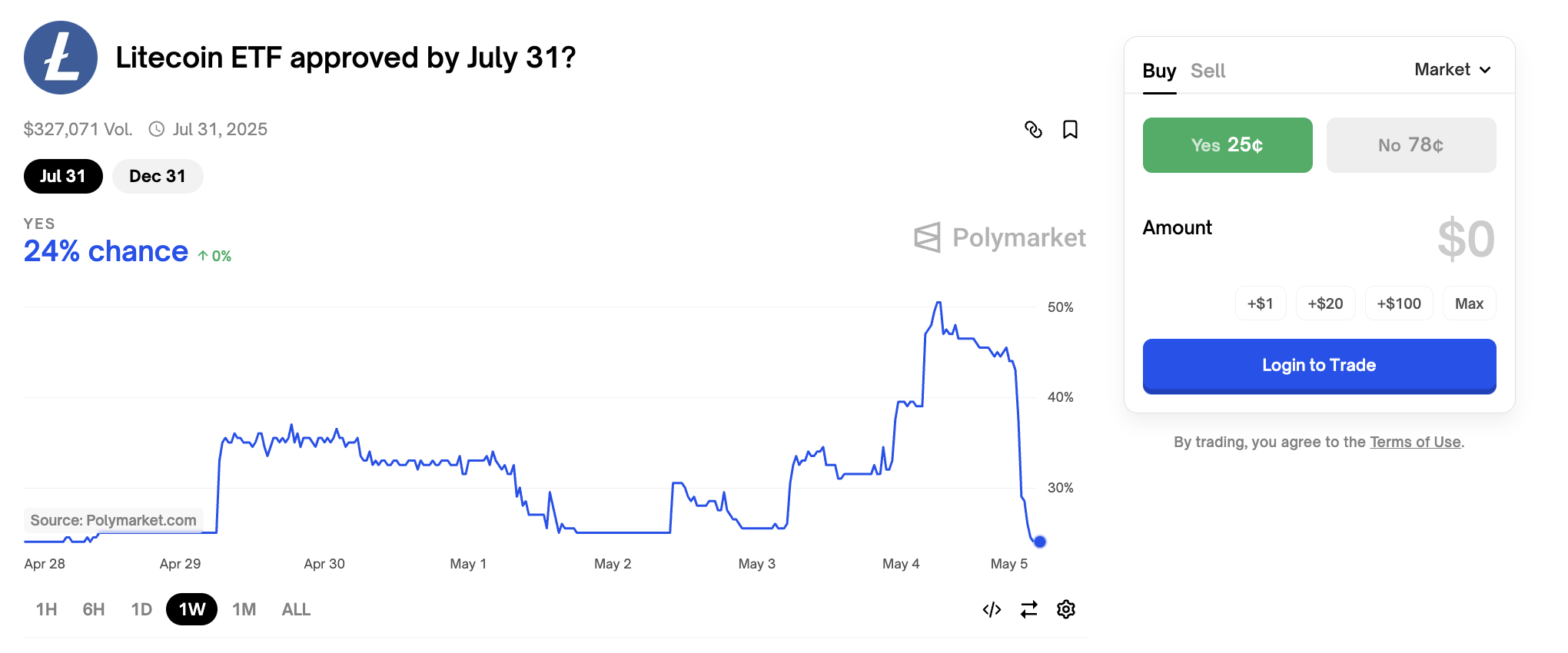

Litecoin’s price fell quickly after the Commission delayed this application, dropping 5% at its lowest point. Polymarket’s odds of a Litecoin ETF approval in Q2 2025 also plummeted, but the chances of a 2025 approval in general remained steady.

The most bullish expectations listed Q2 as a potential time for altcoin ETF approvals, and this bet is now looking rather unlikely.

Overall, things could be a lot worse. James Seyffart, an ETF analyst who predicted the Litecoin delay, didn’t address the request for public comments. It seems like a stretch to claim that the SEC is signaling its intent to refuse this or any other altcoin ETF proposal.

Still, the market can react harshly to such developments in the short term, and traders are repositioning their bets on the altcoin.

The post Litecoin Down 5% After SEC Delays ETF Filing Over Fraud Concerns appeared first on BeInCrypto.